Notes: Towards Runtime Monitoring for Responsible Machine Learning using Model-driven Engineering

Towards Runtime Monitoring for Responsible Machine Learning using Model-driven Engineering

Abstract

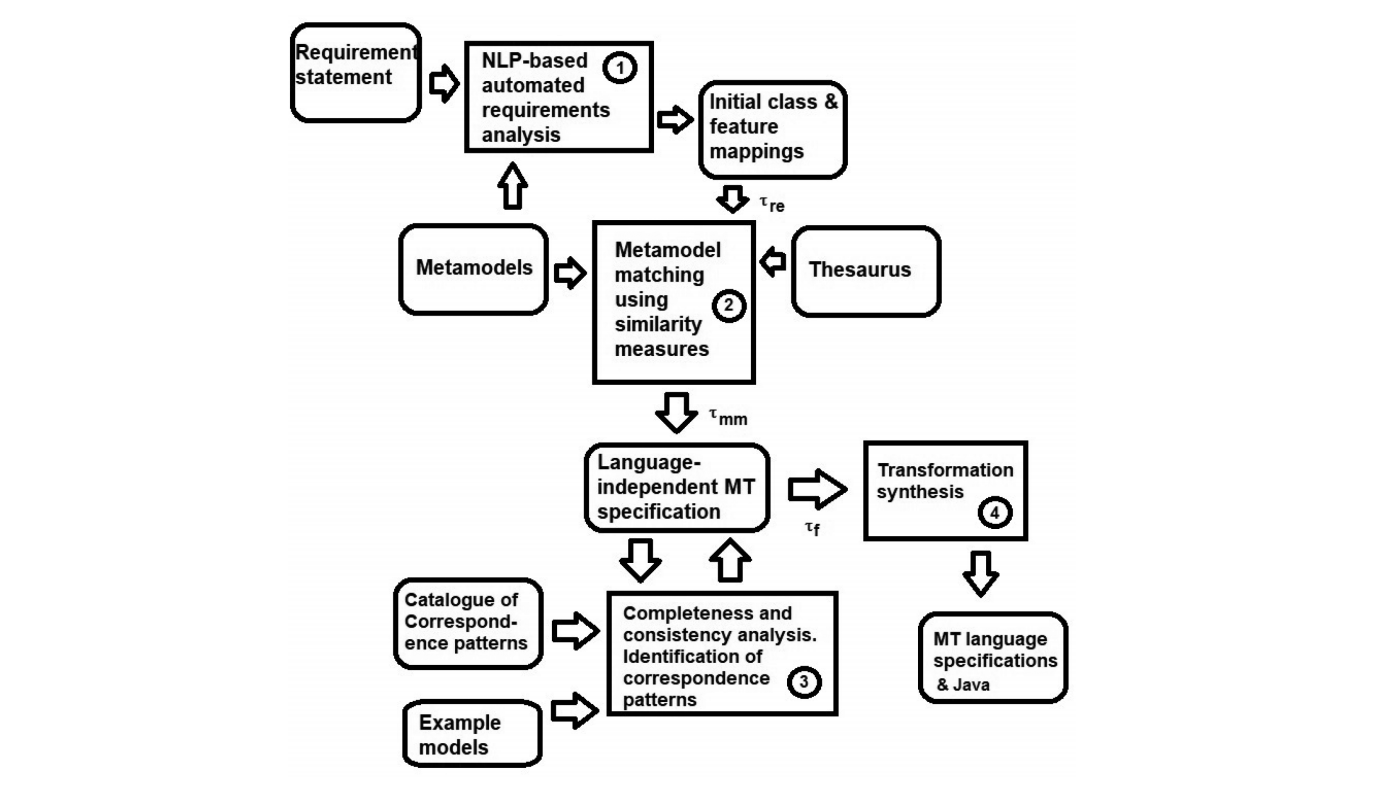

Machine learning (ML) components are used heavily in many current software systems, but developing them responsibly in practice remains challenging. ‘Responsible ML’ refers to developing, deploying and maintaining ML-based systems that adhere to human-centric requirements, such as fairness, privacy, transparency, safety, accessibility, and human values. Meeting these requirements is essential for maintaining public trust and ensuring the success of ML-based systems. However, as changes are likely in production environments and requirements often evolve, design-time quality assurance practices are insufficient to ensure such systems’ responsible behavior. Runtime monitoring approaches for ML-based systems can potentially offer valuable solutions to address this problem. Many currently available ML monitoring solutions overlook human-centric requirements due to a lack of awareness and tool support, the complexity of monitoring human-centric requirements, and the effort required to develop and manage monitors for changing requirements. We believe that many of these challenges can be addressed by model-driven engineering. In this new ideas paper, we present an initial meta-model, model-driven approach, and proof-of-concept prototype for runtime monitoring of human-centric requirements violations, thereby ensuring responsible ML behavior. We discuss our prototype, current limitations and propose some directions for future work.

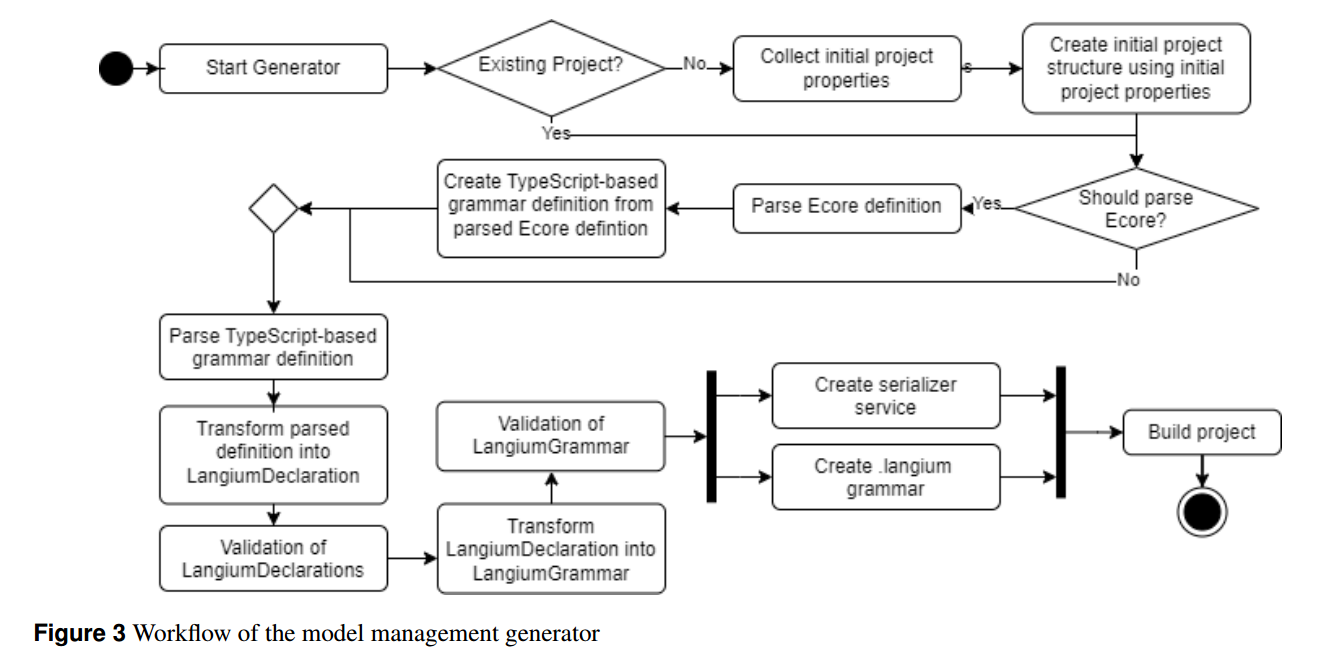

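What the paper does: it designs a meta-model, MoReML; shows examples in Listings 1-3; uses EVL for constraints and EGL for the model-to-text (M2T) transformation; and includes a single screenshot showing that the example runs. The stated motivation is that running ML models involves human factors, so human behavior needs to be constrained, and model-based techniques are used to restrict model inputs and raise warnings. The idea is good but rather idealistic, and the motivation for using MDE is not strong. The amount of work is also too small: many aspects are only touched on, and the experimental section consists of nothing more than one cmd screenshot. It is surprising to see a paper like this, with a six-page main body, appear at MODELS.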
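
To make the "constraints plus M2T" pipeline more concrete, here is a minimal Python sketch of the general idea. It is not the paper's Epsilon code and does not reproduce MoReML: `RangeRequirement`, `validate`, `generate_monitor`, and `load_monitor` are all hypothetical names invented for illustration. `validate` plays the role of an EVL-style check on the model, and `generate_monitor` plays the role of an EGL-style template that turns a model element into monitor source text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RangeRequirement:
    # Hypothetical model element: a named bound on one input feature.
    name: str
    feature: str
    low: float
    high: float


def validate(req):
    # EVL-style well-formedness check on the model itself (assumed rule).
    return [] if req.low <= req.high else [f"{req.name}: low exceeds high"]


def generate_monitor(req):
    # EGL-style model-to-text step: emit Python source for a monitor function.
    return (
        f"def monitor(record):\n"
        f"    value = record[{req.feature!r}]\n"
        f"    if not ({req.low!r} <= value <= {req.high!r}):\n"
        f"        return {req.name!r} + ' violated: ' + repr(value)\n"
        f"    return None\n"
    )


def load_monitor(source):
    # Compile the generated text into a callable monitor.
    namespace = {}
    exec(source, namespace)
    return namespace["monitor"]


req = RangeRequirement(name="AgeRange", feature="age", low=18, high=100)
assert validate(req) == []
monitor = load_monitor(generate_monitor(req))
print(monitor({"age": 30}))  # no violation, returns None
print(monitor({"age": 12}))  # returns a warning string
```

The sketch only covers a single range constraint on one input feature. A real monitor for requirements such as fairness or privacy would need richer model elements and runtime data than this example assumes.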